At CSAIL, the Computer Science and Artificial Intelligence Laboratory of the Massachusetts Institute of Technology, Brandon Carter and colleagues conducted a study showing that neural networks trained on popular datasets such as CIFAR-10 and ImageNet suffer from overinterpretation. This flaw could have dramatic consequences in healthcare or autonomous driving.

The Massachusetts Institute of Technology is a famous American university located in Cambridge, Massachusetts. Founded in 1861 to meet the need for engineers, the institute later became multidisciplinary and is now an internationally renowned center of scientific and technical research from which many Nobel laureates have emerged. CSAIL, the MIT Computer Science and Artificial Intelligence Laboratory, was formed in 2003 by the merger of the Laboratory for Computer Science (LCS) and the Artificial Intelligence Laboratory (AI Lab), and is part of the Schwarzman College of Computing.

The study team

The study was led by Brandon Carter, now CTO of Think Therapeutics but at the time a CSAIL student focused on machine learning, with the support and guidance of David Gifford, a professor of electrical and computer engineering and of biological engineering at MIT. Among other things, Gifford has developed interpretability methods for understanding deep neural networks and has used machine learning to design therapies. Amazon scientists Siddhartha Jain and Jonas Mueller rounded out the team, whose work was presented at the 2021 Neural Information Processing Systems conference in December 2021.

The study

Machine learning, and more specifically deep learning, enables computer systems to learn from data through model training and algorithm design. Image recognition relies on convolutional neural networks (CNNs), which assign a label to an image provided as input and are currently the most effective classification models. Although they were loosely modeled on the human brain, CNNs remain an enigma: why do they make one decision rather than another?

Overinterpretation

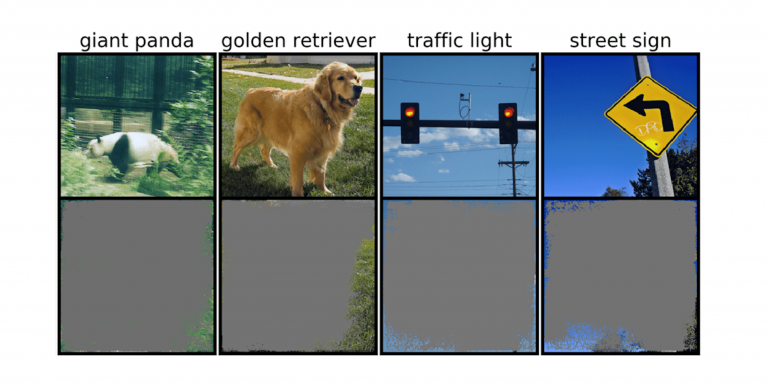

The photos used to train models show not only the object to be learned but also its surroundings: a traffic light, for example, will be mounted on a post at the side of a road, and the sky will be brighter or darker depending on the time the picture was taken. The team found that neural networks trained on popular datasets such as CIFAR-10 and ImageNet suffer from overinterpretation. Models trained on CIFAR-10, for example, made confident predictions even when 95 percent of each input image was missing, something a human could not have done. Brandon Carter, a PhD student at MIT’s Computer Science and Artificial Intelligence Laboratory, said:

“Overinterpretation is a dataset problem caused by these nonsense signals in datasets. Not only are these high-reliability images unrecognizable, but they contain less than 10% of the original image in unimportant areas, such as edges. We found that these images made no sense to humans, but the models could still classify them with high confidence.”
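The kind of check described above, hiding most of an image and seeing whether the classifier remains confident, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: the linear `weights` matrix is a hypothetical stand-in for a trained network, the 32×32 grayscale `image` is random data rather than a CIFAR-10 sample, and ranking pixels by `|weight × pixel|` is an arbitrary importance score chosen for simplicity.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array of scores."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def confidence(weights, image):
    """Toy linear classifier: returns (predicted class, softmax confidence)."""
    probs = softmax(weights @ image.ravel())
    return int(probs.argmax()), float(probs.max())

def keep_top_pixels(image, importance, keep_fraction):
    """Zero out every pixel except the `keep_fraction` with the highest importance."""
    flat = importance.ravel()
    k = max(1, int(round(keep_fraction * flat.size)))
    mask = np.zeros(flat.size, dtype=bool)
    mask[np.argsort(flat)[-k:]] = True
    return np.where(mask.reshape(image.shape), image, 0.0)

rng = np.random.default_rng(0)
image = rng.random((32, 32))                 # assumption: random stand-in for a real photo
weights = rng.normal(size=(10, 32 * 32))     # assumption: stand-in for a trained model

label, full_conf = confidence(weights, image)

# Hypothetical importance score: contribution of each pixel to the predicted class.
importance = np.abs(weights[label].reshape(image.shape) * image)

masked = keep_top_pixels(image, importance, keep_fraction=0.05)
masked_label, masked_conf = confidence(weights, masked)

print(f"full image:   class {label}, confidence {full_conf:.3f}")
print(f"5% of pixels: class {masked_label}, confidence {masked_conf:.3f}")
```

With a real model, a masked image that is unrecognizable to a person yet still classified with high confidence would be a sign of the behavior the study describes.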

According to the study, “a deep image classifier can determine image classes with more than 90% confidence using primarily image edges, rather than an object itself.” Such a result might seem encouraging if these models were used only in domains such as gaming, but in medicine or autonomous driving it is alarming, since any wrong decision can have tragic consequences. The example given is that of “a self-driving car that uses a machine learning method to recognize stop signs, you can test this method by identifying the smallest subset of input that constitutes a stop sign. If it’s a tree branch, a particular time of day, or something that’s not a stop sign, you might be concerned that the car will stop where it’s not supposed to.” Brandon Carter explains:

“There’s the question of how we can modify the datasets in a way that would allow the models to be trained to more closely mimic how a human would think about image classification and therefore hopefully generalize better in these real-world scenarios, such as autonomous driving and medical diagnosis, so that the models don’t have this nonsensical behavior.”
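The stop-sign test mentioned above, finding a small subset of the input that is still enough for the model to make its prediction, can be approximated with a simple greedy backward elimination. The sketch below is an illustration only and is not the procedure used in the study: the linear `weights` model, the 8×8 random input `x`, and the 90%-of-original-confidence `threshold` are all assumptions, and the greedy search returns a subset that is small but not guaranteed to be the smallest possible.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array of scores."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def class_confidence(weights, x, label):
    """Confidence the toy linear model assigns to `label` for input `x`."""
    return float(softmax(weights @ x)[label])

def greedy_sufficient_subset(weights, x, label, threshold):
    """Mask features one at a time, always removing the feature whose removal
    hurts confidence least, and stop before confidence drops below `threshold`.
    Returns a boolean mask of the features that were kept."""
    keep = np.ones(x.size, dtype=bool)
    while keep.sum() > 1:
        best_idx, best_conf = None, -1.0
        for i in np.flatnonzero(keep):
            trial = keep.copy()
            trial[i] = False
            conf = class_confidence(weights, np.where(trial, x, 0.0), label)
            if conf > best_conf:
                best_idx, best_conf = i, conf
        if best_conf < threshold:
            break  # removing anything more would make the subset insufficient
        keep[best_idx] = False
    return keep

rng = np.random.default_rng(1)
x = rng.random(8 * 8)                      # assumption: tiny flattened 8x8 "image"
weights = rng.normal(size=(3, x.size))     # assumption: stand-in for a trained model

label = int(softmax(weights @ x).argmax())
threshold = 0.9 * class_confidence(weights, x, label)

keep = greedy_sufficient_subset(weights, x, label, threshold)
subset_conf = class_confidence(weights, np.where(keep, x, 0.0), label)

print(f"kept {int(keep.sum())} of {x.size} pixels, confidence {subset_conf:.3f}")
```

Inspecting which pixels survive is the point of the exercise: if the kept pixels lie on the background or the image border rather than on the object, the model is relying on signals a person would consider meaningless.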

The study concludes that it would make sense to train models on images in which the object appears against an uninformative background.

Translated from Selon une étude de chercheurs du MIT, les CNN souffrent de surinterprétation